President’s Council of Advisors on Science and Technology Releases Report to the President on the Validity and Reliability of Forensic Feature-Comparison Methods in Criminal Courts

“Some fields of forensic expertise are built on nothing but guesswork and false common sense.” - Judge Alex Kozinski, Ninth U.S. Circuit Court of Appeals

On September 20, 2016, the President’s Council of Advisors on Science and Technology (PCAST) released a report that calls into question the scientific underpinnings of most of the forensic disciplines used in the investigation and prosecution of crime. The report, Forensic Science in Criminal Courts: Ensuring Scientific Validity of Feature-Comparison Methods, comes in response to a 2015 query from President Obama as to whether there are additional science-based steps that could help ensure the validity of forensic evidence used in the U.S. legal system.

On the panel of legal experts who provided guidance on factual matters relating to the interaction between science and the law were NJC faculty members Professor David Faigman (U.C. Hastings College of Law) and Judge Andre Davis (U.S. Court of Appeals, Fourth Circuit).

The study that led to the report involved independent reviews of cases using numerous types of comparison methods – hair analysis, bullet comparison, bitemark comparisons, tire and shoe tread analysis, and the like. Case reviews revealed that many successful prosecutions relied in part on expert testimony from forensic scientists who had told juries that similar features in a pair of samples taken from a suspect and from a crime scene implicated defendants in a crime with a high degree of certainty. In other words, the reviews found that expert witnesses overstated the probative value of their evidence, going far beyond what the relevant science could justify.

According to the report, the emergence of DNA analysis in the 1990s led to serious questioning of the validity of many of the traditional forensic disciplines. Those questions, which extended to testimony based on the disciplines, led in turn to increased efforts to test empirically the reliability of the methods that feature-comparison disciplines employ. PCAST identified two gaps: (1) the need for clarity about the scientific standards for the validity and reliability of forensic methods and (2) the need to evaluate specific forensic methods to determine whether they have been scientifically established to be valid and reliable. The study aimed to help close these gaps for a number of forensic feature-comparison methods, specifically methods for comparing DNA samples, bitemarks, latent fingerprints, firearm marks, footwear, and hair.

The 174-page report includes recommendations to the judiciary, which may be found on page 142. Although the recommendations are tailored for federal judges, other judicial officers can glean information about decision-making on matters pertaining to forensic evidence and expert testimony. Of note:

(A) When deciding the admissibility of expert testimony, federal judges should take into account the appropriate scientific criteria for assessing scientific validity including:

(i) foundational validity, with respect to the requirement under Rule 702(c) that testimony is the product of reliable principles and methods; and

(ii) validity as applied, with respect to the requirement under Rule 702(d) that an expert has reliably applied the principles and methods to the facts of the case.

(B) Federal judges, when permitting an expert to testify about a foundationally valid feature-comparison method, should ensure that testimony about the accuracy of the method and the probative value of proposed identifications is scientifically valid in that it is limited to what the empirical evidence supports. Statements suggesting or implying greater certainty are not scientifically valid and should not be permitted. In particular, courts should never permit scientifically indefensible claims such as: “zero,” “vanishingly small,” “essentially zero,” “negligible,” “minimal,” or “microscopic” error rates; “100 percent certainty” or proof “to a reasonable degree of scientific certainty;” identification “to the exclusion of all other sources;” or a chance of error so remote as to be a “practical impossibility.”

One recommendation is that judges should have resources developed for them on these issues. The National Judicial College is ahead of this curve: In 2014, we obtained a grant from the Laura and John Arnold Foundation to develop a series of self-study modules on forensic evidence in criminal cases. A multi-disciplinary panel of content experts and NJC faculty developed the content for the modules. The modules will be released to the public in mid-October 2016; please look for the registration link in the October 2016 Judicial Edge.

To view the full report, click here.

Additional materials can be found here.

The NJC offers many courses related to evidence, including:

- Scientific Evidence and Expert Testimony, Sep. 26-29, 2016 (Clearwater, FL)

- Trial Advocacy and Evidence for Tribal Prosecutors, Nov. 14, 2016 (Reno, NV)

- Advanced Evidence, Nov. 14-17, 2016 (Scottsdale, AZ)

- Evidence Challenges for Administrative Law Judges, Oct. 3 – Nov. 18, 2016 & Mar. 13 – Apr. 28, 2017 (Web Course)

- Selected Criminal Evidence Issues, Feb. 20 – Apr. 7, 2017 (Web Course)

- Fundamentals of Evidence, Apr. 24 – Jun. 9, 2017 (Web Course)

- Evidence in a Courtroom Setting, Jun. 26 – 29, 2017 (New Orleans, LA)

- Advanced Evidence (JS 617), July 10 – 13, 2017 (Reno, NV) and Oct. 30 – Nov. 2, 2017 (Charleston, SC)

The Hon. Leslie A. Hayashi (Ret.), a retired district court judge from the First Circuit in Honolulu, Hawai...

The National Judicial College has named Dean Aviva Abramovsky as its next president and chief executive off...

Ernest C. Friesen, Jr., the first dean of The National Judicial College, passed away on December 11, 2025, ...

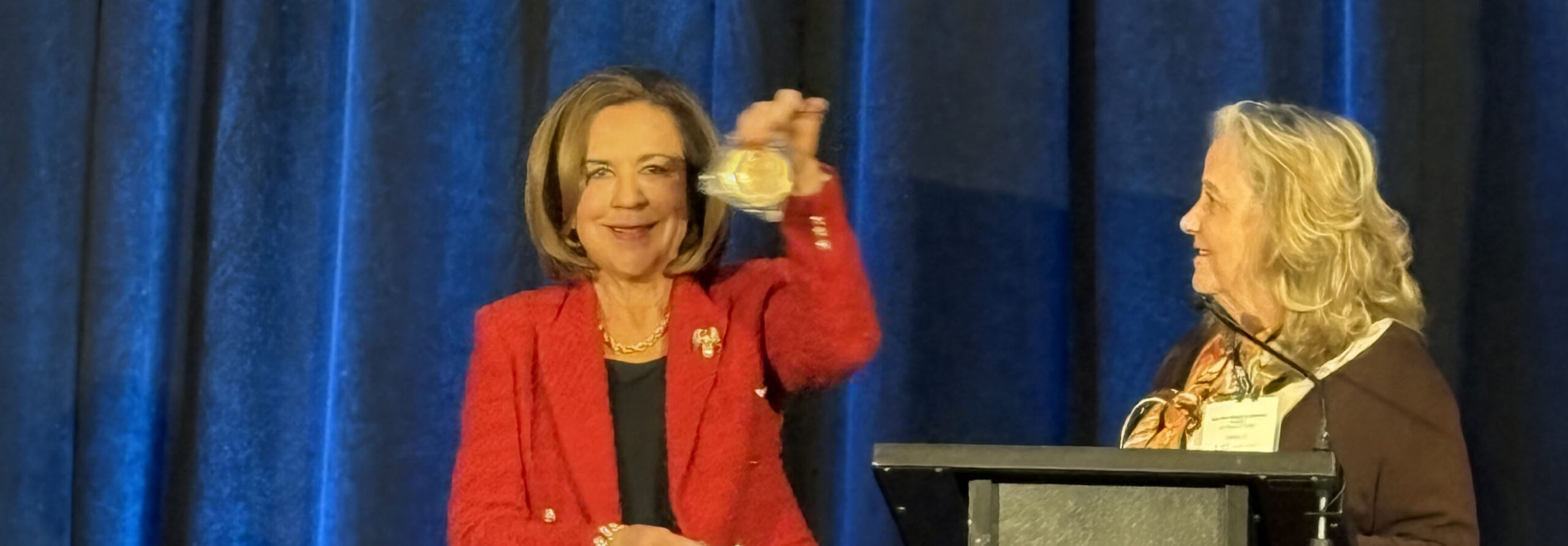

The National Judicial College has awarded Missouri Supreme Court Judge Mary Russell with the Sandra Day O�...

Emeritus Trustee Bill Neukom (left) with former Board of Trustee Chair Edward Blumberg (right) at the NJC 60...